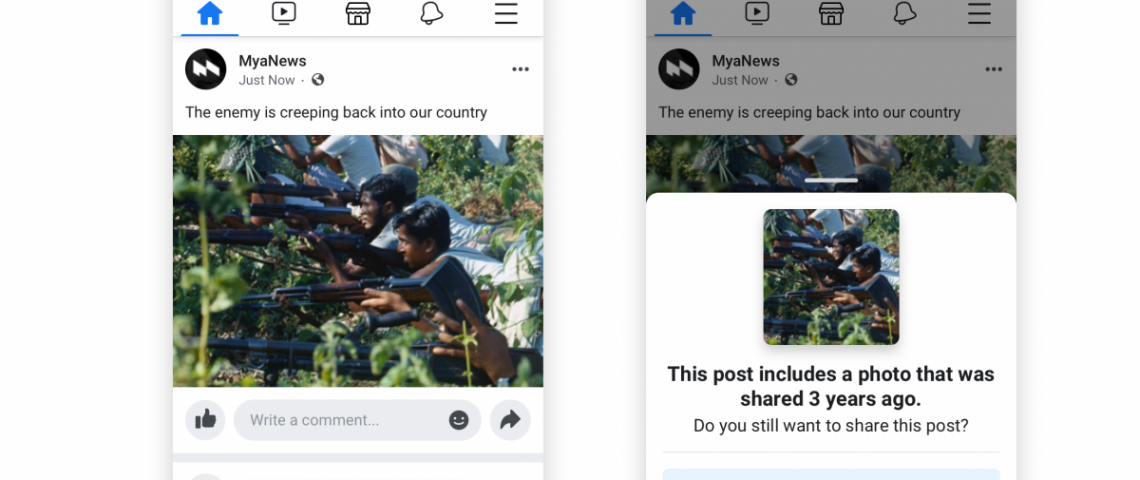

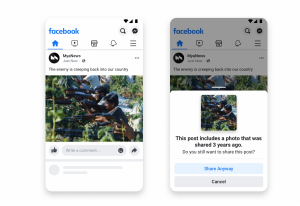

Facebook has launched a new product in Pakistan to help limit the spread of misinformation. It gives people using the platform additional context before they share images that are more than a year old and that could be harmful or misleading.

“We’re empowering people to detect misleading content by giving them more context so that they can decide for themselves whether to share the images or not,” said a Facebook company spokesperson.

Users in Pakistan will be shown a message when they attempt to share specific types of images, including photos that are over a year old and that may come close to violating Facebook’s guidelines on violent content. Interstitials warning users that the image they are about to share could be harmful or misleading will be triggered using a combination of artificial intelligence and human review.

Photo- and video-based misinformation has become a growing challenge around the world, and teams at Facebook have been focused on addressing it.

The launch of this out-of-context images product is part of Facebook’s three-pronged approach to preventing the spread of misinformation: ‘Remove, Reduce, Inform’.

Facebook removes content that violates its Community Standards. It also reduces the distribution of false news once third-party fact-checking partners have marked it as false, which cuts the visibility of such news by up to 80 percent.

In Pakistan, Facebook works with Poynter-certified AFP to fact-check news. Creating a more informed public is also important, and Facebook is focused on building a community in Pakistan that has the skills to recognize false news and knows which sources to trust.